| Contact: | Student Consultation Hours: |
|---|---|
| David Cooper, West Chester University, 25 University Avenue, Room 142, West Chester, PA 19383. Voice: 610-436-2651. E-mail: dcooper@wcupa.edu | Tuesdays and Thursdays: 10:00AM - Noon over Zoom (or in person by request 24 hrs in advance). Fridays: Noon - 1:00PM in person. Mondays and Wednesdays: by appointment over Zoom. |

Intelligent tutoring systems (ITSs) currently make decisions based on measures of student performance. Beyond performance, however, a successful tutor must also keep the student interested in learning all that it has to offer. To support this, we are developing a sensor framework that captures the emotional cues a student gives while interacting with the ITS.

The ITS that this system initially targets is Wayang Outpost (now MathSpring), a geometry tutor. The sensor data will be used together with real-time data about the student's interaction with the ITS, including whether each response is correct or incorrect, the rate at which the student answers questions, and the difficulty of the question presented. Using these data sources, the system will attempt to classify the student's current affective state and to predict the student's future state. The ITS can use this classification and prediction to decide on interventions and on the style or difficulty of the questions and explanations it presents.
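The interaction data described above (correctness, answering rate, and question difficulty) could be summarized over a recent time window before being combined with the sensor stream. The sketch below is hypothetical: the `Response` record, the `interaction_features` function, and the default five-minute window are illustrative assumptions, not the tutor's actual logging format.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Response:
    """One answer logged by the tutor (hypothetical record)."""
    time: float        # seconds since session start
    correct: bool
    difficulty: float  # tutor-assigned difficulty of the question


def interaction_features(responses: Sequence[Response], now: float,
                         window: float = 300.0) -> Dict[str, float]:
    """Summarize the responses given during the last `window` seconds."""
    recent = [r for r in responses if now - window <= r.time <= now]
    if not recent:
        # No answers in the window: report zeros rather than dividing by zero.
        return {"accuracy": 0.0, "answers_per_minute": 0.0, "mean_difficulty": 0.0}
    n = len(recent)
    return {
        "accuracy": sum(r.correct for r in recent) / n,    # fraction correct
        "answers_per_minute": n / (window / 60.0),         # answering rate
        "mean_difficulty": sum(r.difficulty for r in recent) / n,
    }
```

A fixed time window keeps the rate feature comparable across students; a window counted in questions would make "answers per minute" meaningless.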

Four sensor sources have been integrated: a mouse equipped with 8 pressure sensors, a chair pad equipped with 6 pressure sensors and one two-axis accelerometer, a wrist band with a skin conductance sensor, and a camera with affect-detection software. Together these yield a 23-feature vector (8 mouse pressures, 6 chair pressures, 2 accelerometer axes, 1 skin conductance value, and 6 mental-state confidences from the camera) recorded at about 6 Hz.
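The 23 features can be laid out as one fixed-order record per sample. This is a hypothetical sketch: the field names, their order, and the `timestamp` field are assumptions for illustration rather than the framework's actual log format; only the channel counts come from the text.

```python
from dataclasses import dataclass
from typing import List

# Channel counts per multi-channel sensor source, from the description above.
CHANNELS = {"mouse": 8, "chair": 6, "accel": 2, "mindreader": 6}
SAMPLE_RATE_HZ = 6  # approximate recording rate


@dataclass
class FeatureVector:
    """One ~6 Hz sample of all sensor channels (hypothetical layout)."""
    timestamp: float          # seconds since session start (assumed field)
    mouse: List[int]          # 8 mouse pressure readings, 0-1023
    chair: List[int]          # 6 chair pressure readings, 0-1023
    accel: List[float]        # 2 accelerometer axes
    skin_conductance: float   # wrist band, microsiemens
    mindreader: List[float]   # 6 mental-state confidences

    def __post_init__(self) -> None:
        # Reject samples whose multi-channel fields have the wrong length.
        for name, count in CHANNELS.items():
            values = getattr(self, name)
            if len(values) != count:
                raise ValueError(f"{name}: expected {count} values, got {len(values)}")

    def as_list(self) -> List[float]:
        """Flatten into the 23-element feature vector in a fixed order."""
        return [*self.mouse, *self.chair, *self.accel,
                self.skin_conductance, *self.mindreader]


FEATURE_COUNT = sum(CHANNELS.values()) + 1  # +1 for skin conductance -> 23
```

At about 6 Hz, one minute of interaction produces roughly 360 such vectors.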

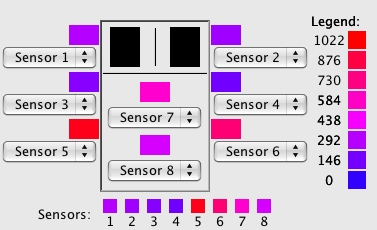

The mouse has eight pressure sensors with values ranging from 0 to 1023. Although the range of values is wide, the sensors take about one second to respond to changes, and in most cases only a few distinct pressure levels occur. These levels differ from person to person. There are three sensors on each side of the mouse and two on the back, as shown below.
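Because only a few pressure levels are distinguishable and those levels vary between people, one plausible preprocessing step is to quantize each sensor into a handful of levels calibrated per user. This is a hypothetical sketch: the `PerUserQuantizer` class, min/max calibration, and the default of four levels are assumptions, not part of the described framework.

```python
from typing import List, Sequence


class PerUserQuantizer:
    """Maps raw 0-1023 mouse pressure readings to a few discrete levels,
    using ranges calibrated separately for each user (hypothetical helper)."""

    def __init__(self, levels: int = 4) -> None:
        if levels < 2:
            raise ValueError("need at least two levels")
        self.levels = levels
        self.low: List[int] = []
        self.high: List[int] = []

    def calibrate(self, samples: Sequence[Sequence[int]]) -> None:
        """Record each sensor's min and max over a user's calibration samples."""
        columns = list(zip(*samples))  # one tuple of readings per sensor
        self.low = [min(col) for col in columns]
        self.high = [max(col) for col in columns]

    def quantize(self, reading: Sequence[int]) -> List[int]:
        """Return a level in [0, levels - 1] for each sensor in the reading."""
        if not self.low:
            raise RuntimeError("call calibrate() first")
        result = []
        for value, lo, hi in zip(reading, self.low, self.high):
            if hi == lo:  # sensor never varied during calibration
                result.append(0)
                continue
            # Position within the user's own range, clipped to [0, 1].
            frac = min(max((value - lo) / (hi - lo), 0.0), 1.0)
            result.append(min(int(frac * self.levels), self.levels - 1))
        return result
```

Calibrating on a short stretch of each user's data keeps one person's light grip from being read as another person's firm squeeze.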

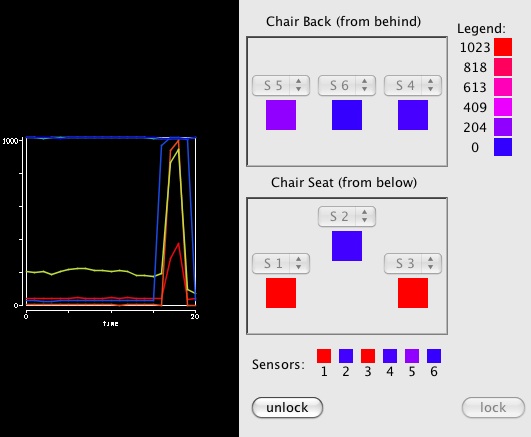

The chair has six pressure sensors, also with values ranging from 0 to 1023. Three of them run down the middle of the backrest. These let us determine whether a student is leaning forward or back in the chair, and also which side the student is leaning toward. The other three pressure sensors are on the seat: two at the front, one on each side, and one at the center rear. These indicate whether the student is on the edge of the seat or fully seated. If the student is squirming, the pressure will change at one or more of these six locations. In addition, an accelerometer on the back cushion lets us determine whether a student is tilting the chair backwards.
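The posture cues described above could be computed from the six pressure channels and the accelerometer roughly as follows. This is a hypothetical sketch: the channel index layout, the 12-sample window (about 2 s at 6 Hz), the threshold of 50 raw units, and the accelerometer axis orientation are all assumptions, not values from the framework.

```python
import math
import statistics
from collections import deque
from typing import Deque, List, Sequence

# Assumed channel layout: 0-2 backrest, 3-4 front-left/front-right seat, 5 seat rear.
BACK = (0, 1, 2)
SEAT_FRONT = (3, 4)
SEAT_REAR = 5


def front_seat_share(p: Sequence[int]) -> float:
    """Fraction of seat pressure on the two front sensors (near 1.0 = edge of seat)."""
    front = sum(p[i] for i in SEAT_FRONT)
    total = front + p[SEAT_REAR]
    return front / total if total else 0.0


def backrest_pressure(p: Sequence[int]) -> int:
    """Total backrest pressure; low values suggest leaning forward."""
    return sum(p[i] for i in BACK)


class SquirmDetector:
    """Flags squirming when any of the six channels varies strongly
    over a short sliding window."""

    def __init__(self, window: int = 12, threshold: float = 50.0) -> None:
        self.threshold = threshold
        self.history: Deque[List[int]] = deque(maxlen=window)

    def update(self, p: Sequence[int]) -> bool:
        self.history.append(list(p))
        if len(self.history) < 2:
            return False
        # Check the population standard deviation of each channel in the window.
        for channel in zip(*self.history):
            if statistics.pstdev(channel) > self.threshold:
                return True
        return False


def tilt_degrees(ax: float, ay: float) -> float:
    """Backrest tilt from a two-axis accelerometer, assuming ay points along
    gravity when the chair is upright and ax points front-to-back."""
    return math.degrees(math.atan2(ax, ay))
```

Checking each channel separately matches the observation that squirming changes the pressure in at least one of the six places, even when the total barely moves.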

The wrist band has a skin conductance sensor and an active RFID tag that communicates using the IEEE 802.15.4 protocol. Once a second, the tag reports three values: the battery voltage, a bias voltage for the sensor, and the sensor's output voltage. Skin conductance is computed from these three values. Empirically, skin conductance measured by the wrist band on the palm side of the wrist ranges from 0.0 to 5.0 microsiemens.
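The wrist band reports once a second, while the combined feature vector is recorded at about 6 Hz, so the slower stream must be aligned to the faster one. One simple option, assumed here rather than taken from the framework, is a zero-order hold that repeats the most recent reading; the `hold_align` function and its input format are hypothetical.

```python
import bisect
from typing import List, Optional, Sequence, Tuple


def hold_align(samples: Sequence[Tuple[float, float]],
               frame_times: Sequence[float]) -> List[Optional[float]]:
    """Zero-order hold: for each frame time, return the value of the most
    recent sample at or before that time, or None if none has arrived yet.

    samples: (timestamp, skin conductance in microsiemens), sorted by time.
    """
    times = [t for t, _ in samples]
    aligned: List[Optional[float]] = []
    for ft in frame_times:
        i = bisect.bisect_right(times, ft) - 1  # index of last sample <= ft
        aligned.append(samples[i][1] if i >= 0 else None)
    return aligned
```

Holding the last value never looks ahead in time; linear interpolation between reports is an alternative, but it must wait for the next report and so adds up to a second of latency.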

Dr. Rana el Kaliouby's MindReader API is used to obtain confidence values for six mental states: agreeing, concentrating, disagreeing, interested, thinking, and unsure. The MindReader software is built on top of NevenVision's Facial Feature Tracking SDK, which has since evolved into Google's Face Detection API. MindReader uses a webcam that captures 320x240-pixel video at 20 to 30 fps. For privacy, only the six derived values, reported about six times per second, are logged.
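The privacy rule above (log only the six derived values) can be enforced at the logging layer by rejecting any field other than the six mental states. This is a hypothetical sketch: the `AffectLogger` class, the dictionary input, and the CSV layout are assumptions, not the MindReader API, and no particular range is assumed for the confidence values.

```python
import csv
import io
from typing import List, Mapping, TextIO

# The six mental states reported by MindReader, as listed in the text.
MENTAL_STATES = ("agreeing", "concentrating", "disagreeing",
                 "interested", "thinking", "unsure")


class AffectLogger:
    """Writes one CSV row per report: a timestamp plus the six mental-state
    confidences. Any other field is rejected, so nothing else is logged."""

    def __init__(self, stream: TextIO) -> None:
        self.writer = csv.writer(stream)
        self.writer.writerow(["timestamp", *MENTAL_STATES])

    def log(self, timestamp: float, confidences: Mapping[str, float]) -> List[float]:
        extra = set(confidences) - set(MENTAL_STATES)
        missing = set(MENTAL_STATES) - set(confidences)
        if extra or missing:
            raise ValueError(f"unexpected {sorted(extra)}, missing {sorted(missing)}")
        row = [timestamp, *(confidences[s] for s in MENTAL_STATES)]
        self.writer.writerow(row)
        return row


if __name__ == "__main__":
    # Example: log one report into an in-memory buffer and print the CSV.
    buffer = io.StringIO()
    logger = AffectLogger(buffer)
    logger.log(0.17, {state: 0.5 for state in MENTAL_STATES})
    print(buffer.getvalue())
```

Validating keys rather than filtering them means a stray field such as a frame buffer raises an error instead of being silently dropped, which makes privacy leaks visible during development.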